The last article looked at the behind-the-scenes details of Ather’s automated vehicle Over-the-Air (OTA) testing, which ensures that each software release enhances the Ather experience. This rigorous process forms the foundation of Ather’s software testing.

This article explores how Ather’s software testing addresses the challenges of hardware dependence to build a scalable, stable, and secure testing environment for OTA. A key challenge in software testing across hardware variants is rapidly deploying automated tests across multiple devices, whether running numerous regression tests or evaluating new hardware configurations.

Meeting this challenge calls for a solution that satisfies the following requirements.

Frictionless Deployment - The primary objective is to maximise time spent on effective test automation while minimising time spent developing distribution-related abstraction layers.

Cross-Platform Compatibility - The need is to ensure smooth deployment and execution across all platforms by minimising or eliminating platform-specific bottlenecks.

Seamless Sync - With automation code deployed across teams in multiple labs, it is crucial to keep deployments synchronised with automation releases in near real-time.

Zero Latency - Automation scripts communicate with multiple hardware components while simultaneously streaming test data (logs, CAN communication, etc.), so the solution must not add latency of its own.

Ather Test Stack - Ather’s in-house software testing framework has steadily evolved, migrating from code mirroring to a server-client model and finally to a scalable, robust Docker-based solution that lets test automation scale seamlessly. Navigating these architectures and technologies has proved insightful.

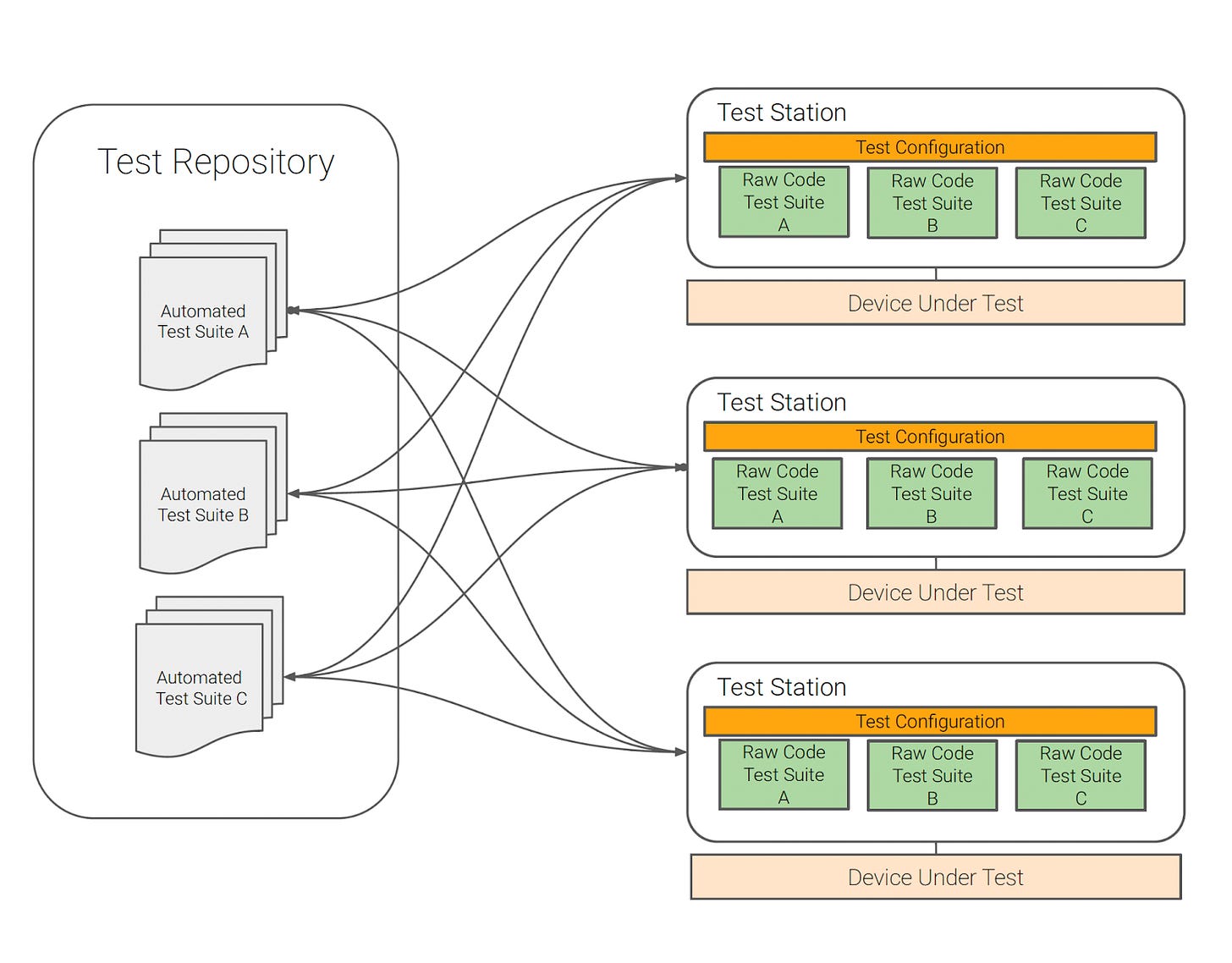

Phase 1: Code Mirroring

Initially, Ather’s software testing adopted a raw code mirroring and deployment solution, in which automation code is cloned locally and executed as is. With the manifold increase in automation and the complexity that followed, the following challenges were faced:

Code Synchronisation - As automation scaled, managing code revisions across testing stations for minor updates and platform-specific adjustments became increasingly difficult. Code changes had to be reflected instantly across all instances using the test code. Even one unsynchronised instance led to inaccurate test data and results, causing confusion. Tracking and ensuring synchronised code across instances proved to be both time-consuming and inefficient.

Cross-Platform Compatibility - Duplicating code across diverse hardware and software platforms necessitates platform-specific libraries and compilations, leading to increased maintenance and unnecessary bloat. This became a significant hurdle for Ather's software team when scaling automation across diverse hardware configurations, from desktops to Raspberry Pis.
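The synchronisation problem can be made concrete with a small sketch. It assumes each test station can report the revision of the automation code it has checked out; the station names and revision strings are hypothetical and only serve the illustration.

```python
from typing import Dict, List


def find_unsynchronised(station_revisions: Dict[str, str], release_revision: str) -> List[str]:
    """Return the stations whose checked-out revision differs from the release."""
    return sorted(
        station
        for station, revision in station_revisions.items()
        if revision != release_revision
    )


# Hypothetical station names and revision identifiers, for illustration only.
stations = {
    "lab-a-desktop": "a1b2c3d",
    "lab-a-rpi-01": "a1b2c3d",
    "lab-b-rpi-02": "9f8e7d6",  # missed the last update
}

stale = find_unsynchronised(stations, release_revision="a1b2c3d")
print(stale)  # ['lab-b-rpi-02']
```

With mirrored code, a check of this kind has to be run and acted on for every station after every change, which is where the time went.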

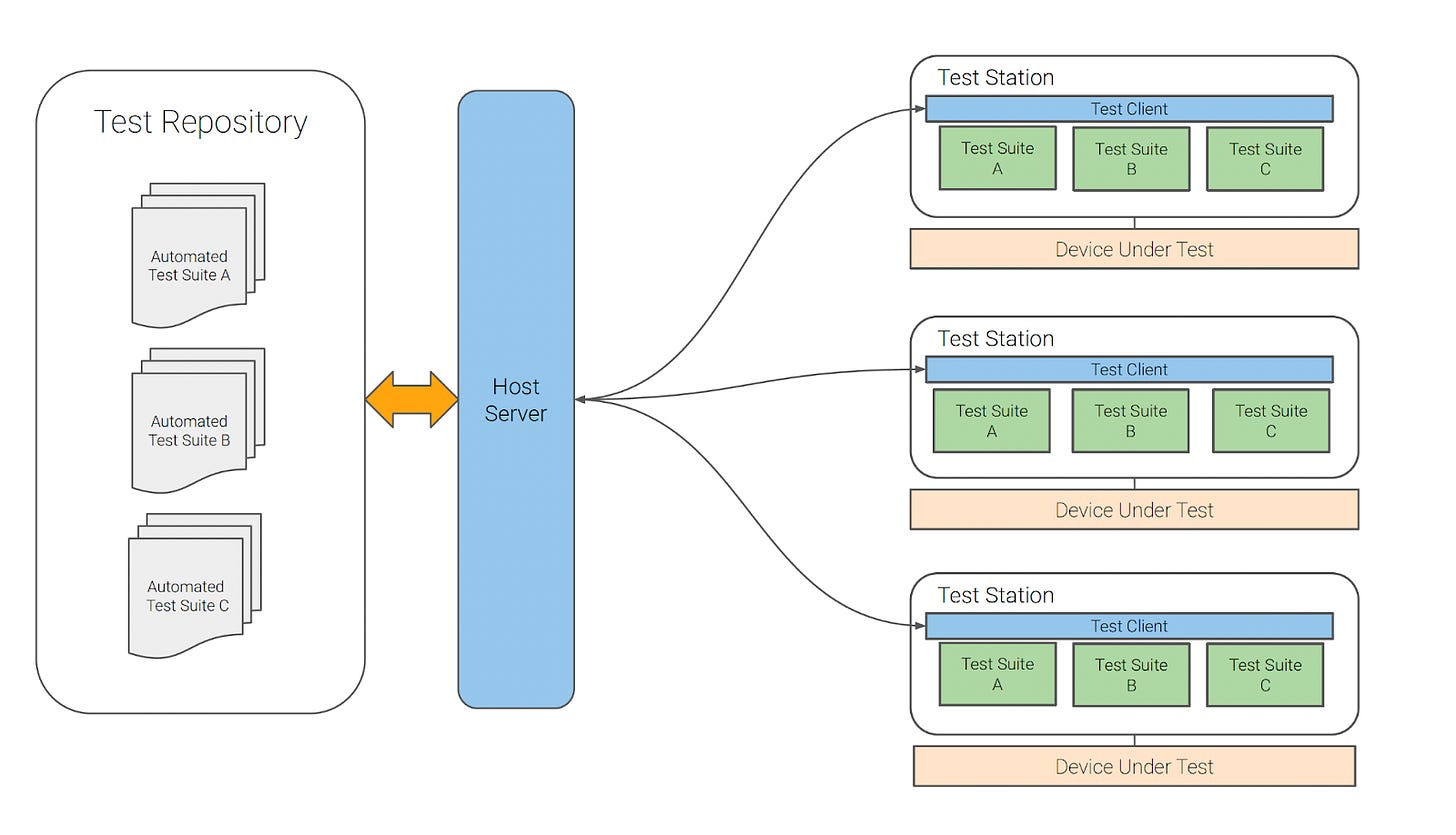

Phase 2: Server-Client Model

Given the challenges posed by code mirroring, Ather’s software testing adopted a solution in which automation code is hosted and executed on test station hosts, which act as clients and follow instructions from a server. While automation code versioning is much easier with a server-client architecture, the challenges with this approach became apparent over time in the following ways:

Single Point of Failure - Server-side failures pose a significant risk, as they disrupt the automation test flow and make every client dependent on server availability. When the server failed suddenly, automated testing across many variants running over multiple days was severely impacted.

Hardware Sensitive Timing - As detailed in the OTA automation article, Ather's test verification setup is elaborate and involves real-time interactions with multiple hardware components and test applications, making automation test execution complex. Consequently, handling real-time traffic from multiple test setups simultaneously became a complex undertaking for the server-client infrastructure, with even minor lags causing inaccuracies that led to false positives or false negatives.

Latency Bottlenecks - At Ather, software test automations run continuously across multiple hardware systems, including HIL and SIL platforms, which requires substantial network bandwidth. Any latency or packet loss between client and server can produce anomalies in test results.
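A rough illustration of how network lag turns into a wrong verdict, assuming a timing check with a fixed deadline. The 100 ms deadline and the delay figures are made-up numbers, not Ather's measurements.

```python
def timing_verdict(device_response_ms: float, network_delay_ms: float, deadline_ms: float) -> str:
    """Verdict as seen by the verifier: it observes device time plus network delay."""
    observed_ms = device_response_ms + network_delay_ms
    return "PASS" if observed_ms <= deadline_ms else "FAIL"


# Illustrative numbers only: the device answers in 80 ms against a 100 ms deadline.
local = timing_verdict(80, network_delay_ms=0, deadline_ms=100)
remote = timing_verdict(80, network_delay_ms=35, deadline_ms=100)
print(local, remote)  # PASS FAIL
```

The device behaves identically in both cases; only the path between the hardware and the code judging it has changed, and the remote verdict reports a failure the device did not cause.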

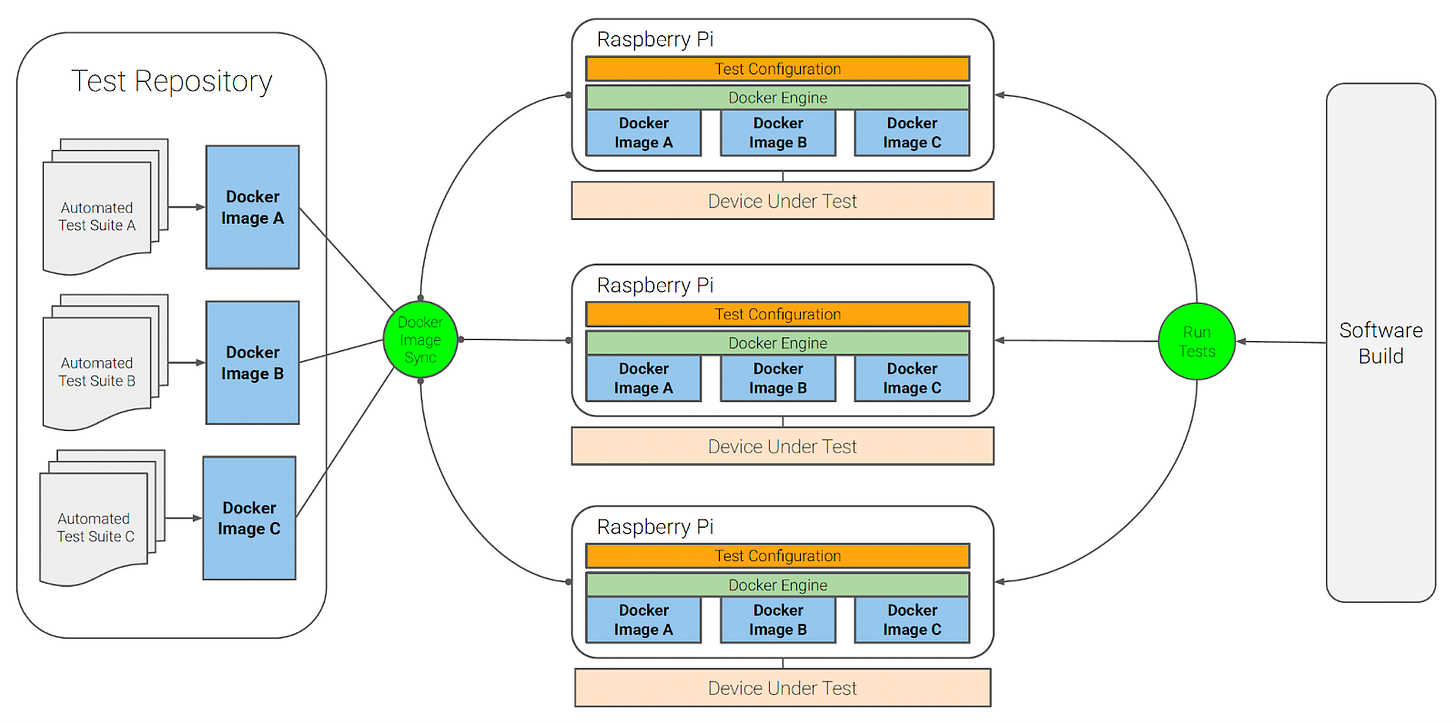

Phase 3: Docker as a Solution

With the knowledge gained from both of the above approaches, Ather's software testing adopted Docker, a multi-platform distribution solution used successfully in the DevOps domain. Docker containers offer the advantage of being:

Self-contained - Each container includes everything it needs to function, with no dependency on pre-installed software on the host machine.

Isolated - Containers run independently, minimising their impact on the host system and other containers, which enhances application security.

Independent - Each container is managed separately, so removing one won't affect the others.

Portable - Containers run consistently anywhere, from a development machine to data centers to cloud environments.

Though adopting and scaling automation using Docker required Ather’s software testing to overcome a few challenges in understanding and learning container solutions, the advantages when scaling OTA automation became apparent in the following ways.

Code Synchronisation - Once test automation code is committed to release, the Docker test images on all host devices are updated automatically, which also minimises configuration drift.

Multi-platform development - While Docker containers run tests within their own environment hosted on Raspberry Pis, development of automation code continues simultaneously on multiple platforms, giving Ather's automation engineers significant flexibility.

Security - Docker engines enhance security by supporting signed images and providing controlled access capabilities.

Zero latency - With automation code running in containers hosted on Raspberry Pis physically connected to test devices, there is virtually no latency.

Scaling OTA Testing - An Example

For OTA testing, a single Docker image is built which encapsulates the entire test. It comprises:

A lightweight Docker base image.

The necessary PeakCAN drivers and ADB tools.

The Python runtime and all required libraries.

OTA test automation scripts.
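As a minimal sketch of how these four components could map onto image layers, the Python helper below renders Dockerfile text. Everything specific in it is an assumption rather than Ather's actual setup: the `python:3.11-slim` base, the `can-utils` and `adb` packages (the article does not say which PeakCAN components are installed, and kernel-level drivers would live on the host, since containers share the host kernel), the `/opt/ota-tests` path, and pytest as the test runner.

```python
from typing import Sequence


def render_dockerfile(
    base_image: str,
    system_packages: Sequence[str],
    requirements_file: str,
    scripts_dir: str,
    entrypoint: Sequence[str],
) -> str:
    """Render Dockerfile text with one section per component listed above."""
    entry = ", ".join(f'"{part}"' for part in entrypoint)
    lines = [
        # 1. Lightweight base image.
        f"FROM {base_image}",
        # 2. System-level tooling (placeholders for the CAN and ADB tools).
        "RUN apt-get update && apt-get install -y --no-install-recommends "
        + " ".join(system_packages)
        + " && rm -rf /var/lib/apt/lists/*",
        "WORKDIR /opt/ota-tests",
        # 3. Python libraries (the base image is assumed to ship the runtime).
        f"COPY {requirements_file} ./requirements.txt",
        "RUN pip install --no-cache-dir -r requirements.txt",
        # 4. The OTA test automation scripts themselves.
        f"COPY {scripts_dir} ./tests",
        f"ENTRYPOINT [{entry}]",
    ]
    return "\n".join(lines) + "\n"


# All values below are illustrative assumptions.
dockerfile = render_dockerfile(
    base_image="python:3.11-slim",
    system_packages=["can-utils", "adb"],
    requirements_file="requirements.txt",
    scripts_dir="ota_tests/",
    entrypoint=["python", "-m", "pytest", "tests"],
)
print(dockerfile)
```

Ordering the layers from least to most frequently changed means a change to the test scripts only rebuilds the final layers, so hosts pull a small update rather than the whole image.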

Running this image as a container creates a self-sufficient, identical testing environment on any machine that supports Docker. The setup has the following key elements.

Docker Image - A blueprint that defines the steps to assemble the OTA automation container.

Test Configuration - The input file containing all necessary prerequisites and parameters that are set up before test execution.

Build Integration - The final step in ensuring that automation is triggered as part of the build pipeline.
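The three elements above can be tied together in a short sketch: a pipeline step reads the test configuration, checks it, and assembles the `docker run` invocation. This is an illustration under stated assumptions, not Ather's pipeline. The JSON format, the key names (`image`, `vehicle_variant`, `target_build`, `devices`), the registry URL, and all paths are hypothetical.

```python
import json
from typing import Any, Dict, List

# Hypothetical parameter names; the real configuration schema is not given in the article.
REQUIRED_KEYS = ("image", "vehicle_variant", "target_build")


def load_test_config(text: str) -> Dict[str, Any]:
    """Parse a JSON test configuration and fail early if a required key is missing."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError(f"missing configuration keys: {', '.join(missing)}")
    return config


def docker_run_command(config: Dict[str, Any], config_path: str) -> List[str]:
    """Build the argument list a pipeline step could pass to subprocess.run."""
    # Assumption: CAN is exposed as a host network interface, hence host networking.
    command = ["docker", "run", "--rm", "--network", "host"]
    for device in config.get("devices", []):
        command += ["--device", device]
    # Mount the configuration read-only so the container sees the same inputs.
    command += ["-v", f"{config_path}:/opt/ota-tests/config.json:ro"]
    command.append(config["image"])
    return command


example = """
{
  "image": "registry.example.com/ota-tests:1.4.0",
  "vehicle_variant": "variant-a",
  "target_build": "build-1234",
  "devices": ["/dev/ttyUSB0"]
}
"""
config = load_test_config(example)
print(docker_run_command(config, "/home/pi/ota/config.json"))
```

Validating the configuration before the container starts keeps a missing parameter from surfacing hours into a long test run.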

Conclusion

Scaling vehicle OTA testing using Docker is another example of Ather's commitment to rigorous software testing and to developing unique solutions through advanced automation, continuing to push the boundaries of innovation in the EV industry. All of this not only enhances the end-user experience but also sets a benchmark for testing excellence.

Upcoming Topics

Our forthcoming article will delve into how Ather is leveraging Artificial Intelligence, shedding light on how AI-driven tools and techniques are improving the efficiency, accuracy and depth of testing. The article will also provide a deep dive into the specific AI frameworks and proprietary solutions that Ather has implemented to achieve a more sophisticated and scalable testing infrastructure.